AI Isn’t a Friend: Teach Your Child the Difference

Artificial intelligence (AI) is becoming a regular conversation partner for kids through chatbots and smart speakers, including platforms like ChatGPT, Google Gemini, Amazon Alexa, Microsoft Copilot, Snapchat My AI, and others.

These tools aren’t human, but children often treat them like real companions. AI can seem caring and understanding: it answers questions, makes jokes, and offers encouragement. But there is no person behind it, only an algorithm. That’s why it’s important to explain to your child that AI is not a friend and should be used with care.

🤖 Why Kids Believe the Chatbot Is “Real”

Children naturally attribute feelings and personalities to objects. They talk to toys or invent imaginary friends, and that’s a normal part of growing up. But AI makes things trickier: it responds in real time, matches the child’s mood, and speaks like a real person.

Researchers call this an “empathy gap”: the chatbot feels emotionally real to the child, but its replies are just patterns, with no genuine empathy behind them.

AI can use your child’s name, speak their language, and respond to emotions. This creates a feeling of closeness and trust, especially if a child lacks emotional support in real life.

⚠️ What the Risks Are

At first glance, chatting with a bot may seem like harmless fun. But the younger the child, the harder it is for them to tell the difference between real connection and imitation. And when trust is involved, especially with personal topics, there are risks parents should be aware of.

Encouraging risky behavior

AI may accidentally go along with a dangerous idea because it is designed to be friendly and agreeable. It might encourage a child who talks about running away or hurting themselves instead of stopping the conversation.

Illusion of care

AI might seem understanding, but it can’t truly tell when a child is upset. It doesn’t worry and it doesn’t care; it simply responds.

Loss of critical thinking

AI speaks confidently, and younger kids may believe it without question. They might not understand that bots can make mistakes, make things up, or present fiction as fact.

Over-reliance on AI conversations

If a child lacks emotional support, they may start turning to a bot more and more, and grow distant from real conversations with friends or family. Some may begin to hide their feelings, look for comfort in the bot, and shut others out.

Crossing boundaries

Bots adapt to the way your child talks. They might joke, flirt, or play along without understanding the context or what is appropriate for the child’s age.

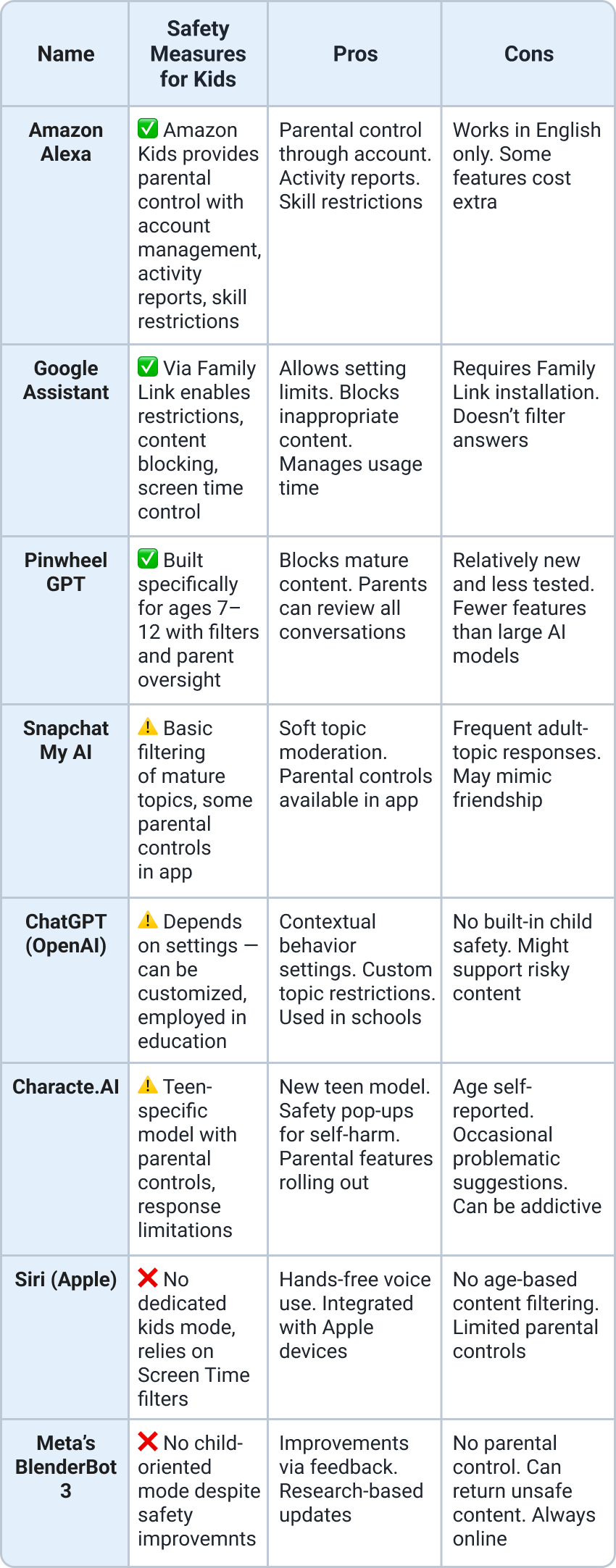

🛡️ Which AI Platforms Consider Child Safety

Some AI tools have already added safety measures for interacting with kids. Here’s what parents should know:

🗣️ How to Talk to Your Child About Artificial Intelligence

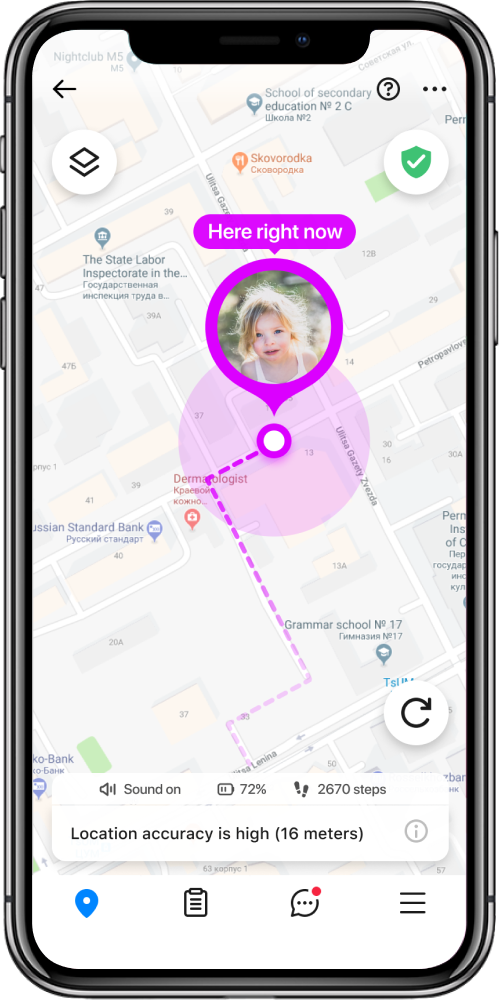

AI has become part of children’s lives, and our role isn’t to ban it, but to teach them how to use it safely. The key is to talk calmly, with curiosity and respect.

- Ask without judgment: “Hey, which bots do you talk to? What do they say? Do you like them?” This kind of calm curiosity helps your child feel safe opening up and sharing their thoughts.

- Explain in simple words: “It might say things like ‘I understand you, you’re not alone,’ but it doesn’t actually know who you are. It just picks the words that seem to fit. It’s not a friend, it’s a program.” For younger children, you can compare it to a parrot or an echo: “It repeats things, but it doesn’t understand.”

- Help them set boundaries: “If you’re feeling sad, let’s talk about it together. A bot won’t understand if something really hurts.” Also explain that they shouldn’t share their address, last name, or friends’ names, even if the bot seems “nice.”

- Build critical thinking: “Do you think that’s true? Where could we check?” Read the bot’s answers together, look for mistakes, and search online for other perspectives. You can turn it into a game: “What would a real person say?”

AI is just a tool. It can be helpful, interesting, even fun. But it can’t feel emotions, doesn’t understand consequences, and will never replace real human connection.

To keep your child safe, you don’t need to ban everything. You just need to be there to explain, to guide, and to support.

References:

- 2025 Common Sense Census: Media Use by Kids Age 0–18, Common Sense Media, 2025

- How to Talk With Your Kids About AI Companion Bots, KQED, 2025

- AI Chatbots and Companions — Risks to Children and Young People, eSafety Commissioner, Australia, 2024

- AI Chatbots have shown they have an ‘empathy gap’ that children are likely to miss, University of Cambridge, 2024

- ‘No Alexa, no’: designing child-safe AI and protecting children from the risks of the ‘empathy gap’ in large language models, Learning, Media and Technology, 2024

- AI Chatbots for Kids: A New Imaginary Friend or Foe?, Institute for Family Studies, 2024
